Let’s derive the evidence lower bound used in variational inference using Jensen’s inequality and the Kullback-Leibler divergence.

In Bayesian inference we are often tasked with solving analytically intractable integrals. Approximate techniques such as Monte Carlo integration and quadrature work well when the variable being integrated over is low-dimensional. For high-dimensional integrals, however, practitioners often resort to Markov chain Monte Carlo or variational inference (VI). In this article we cover the latter, starting from basic results encountered in high school or an undergraduate science degree.

Background

In this section we’ll briefly highlight the preliminary results required for understanding VI. The aim is to establish notation and remind readers of a few key results. If these results are unfamiliar, then an introduction to Bayesian statistics, such as the Bayesian Data Analysis book, may be a more suitable starting point.

Bayes’ Theorem

The cornerstone of Bayesian statistics, Bayes’ theorem seeks to quantify the probability of an event, conditional on some observed data. The event under consideration here is arbitrary and we simply denote its value by $\theta$. We further state our prior belief about the value of $\theta$ through a prior distribution $p(\theta)$. Our observed data is denoted by $\mathbf{y}$. Together, Bayes’ theorem can be expressed by

$$p(\theta \mid \mathbf{y}) = \frac{p(\mathbf{y} \mid \theta)\, p(\theta)}{p(\mathbf{y})}.$$

We'll expand more on this when we get to the topic of VI but, for completeness, we call $p(\mathbf{y} \mid \theta)$ the likelihood and $p(\mathbf{y})$ the marginal likelihood.

Expectation

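In Bayes’ theorem above, $\theta$ is a random variable with associated density $p(\theta)$. Whilst we place a prior belief about the density of $\theta$, this, in practice, is almost certainly not the true underlying density of $\theta$. Assuming $\theta$ is a continuous random variable, the expectation operator is defined as

$$\mathbb{E}[\theta] = \int \theta\, p(\theta)\, \mathrm{d}\theta.$$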

The expectation operator is also linear. This means that if we have a second random variable $\phi$, then the following identity is true

$$\mathbb{E}[\theta + \phi] = \mathbb{E}[\theta] + \mathbb{E}[\phi].$$

Jensen’s Inequality

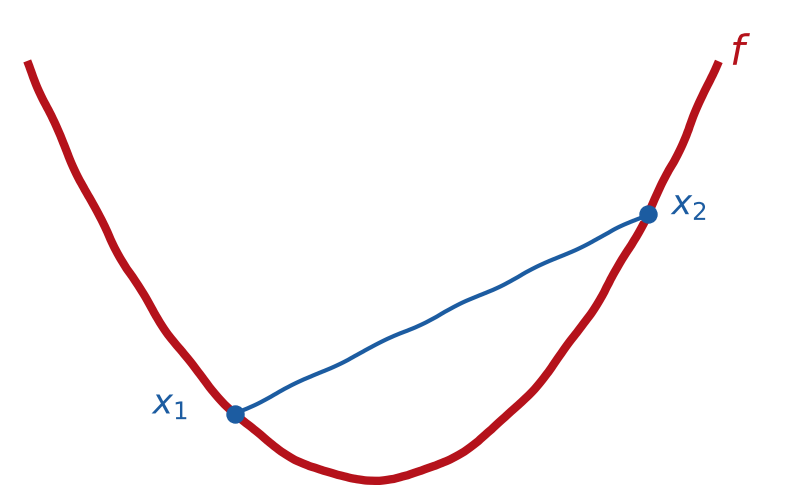

For a convex function $f$ and a random variable $\theta$, Jensen’s inequality states that

$$f(\mathbb{E}[\theta]) \leq \mathbb{E}[f(\theta)].$$

Informally, a convex function is a u-shaped function such that if we pick any two values $x_1$ and $x_2$ and draw a line between $(x_1, f(x_1))$ and $(x_2, f(x_2))$, then the line will lie on or above the function's curve.
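To make the inequality concrete, here is a minimal numerical sketch. The helper name `jensen_gap`, the Gaussian samples and the two convex test functions are illustrative choices, not part of the original derivation. It estimates both sides of Jensen's inequality from samples and returns their difference, which should be non-negative for any convex function.

```python
import numpy as np

def jensen_gap(f, samples):
    """Return mean(f(x)) - f(mean(x)), which is >= 0 for convex f."""
    samples = np.asarray(samples, dtype=float)
    return float(np.mean(f(samples)) - f(np.mean(samples)))

rng = np.random.default_rng(0)
x = rng.normal(loc=1.0, scale=2.0, size=10_000)

# For f(x) = x^2 the gap is exactly the (biased) sample variance of x.
gap_square = jensen_gap(np.square, x)

# exp is also convex, so its gap is non-negative too.
gap_exp = jensen_gap(np.exp, x)
```

For the squaring function the gap coincides with the sample variance, which is one way to see why a variance can never be negative.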

Motivation

With the necessary mathematical tools in hand, I’ll now motivate the use of VI through three cases where an intractable integral can commonly occur.

Normalising Constants

Bayes’ theorem can be written as

$$p(\theta \mid \mathbf{y}) = \frac{p(\mathbf{y} \mid \theta)\, p(\theta)}{\int p(\mathbf{y} \mid \theta)\, p(\theta)\, \mathrm{d}\theta}.$$

The denominator here is simply the integral of the numerator with respect to our model parameters and its purpose is to ensure that the posterior distribution is a valid probability density function. However, $\theta$ can often be a high-dimensional parameter and the integrand may have no nice analytical form. As such, evaluating the integral can consume a large portion of a Bayesian's time.

Marginalisation

If we introduce an additional parameter $\phi$ that we’d like to obtain a posterior distribution for, then we now have the joint posterior distribution $p(\theta, \phi \mid \mathbf{y})$. Assuming we can calculate this quantity, we often wish to marginalise $\phi$ out of our joint posterior, which requires evaluation of the integral

$$p(\theta \mid \mathbf{y}) = \int p(\theta, \phi \mid \mathbf{y})\, \mathrm{d}\phi.$$

Again, in the absence of an analytical solution, the evaluation of this integral will be challenging for high-dimensional $\phi$.

Expectations

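If we have our posterior distribution $p(\theta \mid \mathbf{y})$, then we may wish to evaluate the expectation of a function $g$ of $\theta$, e.g.,

$$\mathbb{E}[g(\theta) \mid \mathbf{y}] = \int g(\theta)\, p(\theta \mid \mathbf{y})\, \mathrm{d}\theta.$$

Examples of such cases are the conditional mean, where $g(\theta) = \theta$, and the conditional covariance, where $g(\theta) = (\theta - \mathbb{E}[\theta \mid \mathbf{y}])(\theta - \mathbb{E}[\theta \mid \mathbf{y}])^{\top}$.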

Variational Inference

Hopefully by this point the need for techniques for evaluating challenging, high-dimensional integrals is clear. In this derivation we’ll be considering the case of an intractable normalising constant, which makes accessing our posterior distribution intractable. VI seeks to resolve this issue by identifying the distribution $q_{\lambda}^{\star}(\theta)$ from a family of distributions $\mathcal{Q}$ that best approximates the true posterior $p(\theta \mid \mathbf{y})$. Here $\lambda$ denotes the parameters of $q_{\lambda}$, which are known as the variational parameters.

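Typically, $\mathcal{Q}$ is the family of multivariate Gaussian distributions, but this is by no means a requirement. We use the Kullback-Leibler (KL) divergence to quantify how well a candidate distribution $q_{\lambda}(\theta)$ approximates $p(\theta \mid \mathbf{y})$ and we write this as

$$\operatorname{KL}\big(q(\theta) \,\|\, p(\theta \mid \mathbf{y})\big) = \int q(\theta) \log \frac{q(\theta)}{p(\theta \mid \mathbf{y})}\, \mathrm{d}\theta.$$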

There are two things to note here: 1) the KL-divergence is asymmetric (to see this, simply rewrite the definition with $q$ and $p$ reversed) and 2) we have dropped the dependence of $q$ on $\lambda$ for notational brevity.
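The asymmetry is easy to check numerically. The sketch below uses the standard closed-form KL divergence between two univariate Gaussians; the function name `kl_gauss` and the particular means and standard deviations are arbitrary choices made for illustration.

```python
import math

def kl_gauss(m1, s1, m2, s2):
    """KL( N(m1, s1^2) || N(m2, s2^2) ) for univariate Gaussians."""
    return math.log(s2 / s1) + (s1**2 + (m1 - m2) ** 2) / (2 * s2**2) - 0.5

# Swap the roles of the two distributions and compare.
kl_qp = kl_gauss(0.0, 1.0, 1.0, 2.0)  # KL(q || p)
kl_pq = kl_gauss(1.0, 2.0, 0.0, 1.0)  # KL(p || q)
```

The two values differ substantially, so which distribution sits in the first argument matters. VI as described here places the approximation $q$ first.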

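We define our optimal distribution as the distribution that satisfies

$$q^{\star}(\theta) = \operatorname*{arg\,min}_{q \in \mathcal{Q}} \operatorname{KL}\big(q(\theta) \,\|\, p(\theta \mid \mathbf{y})\big).$$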

Clearly this objective is intractable, as simply evaluating the KL divergence term would require evaluating the posterior $p(\theta \mid \mathbf{y})$, and hence its normalising constant $p(\mathbf{y})$, which is the very reason we have had to resort to VI in the first place. To circumvent this we introduce the evidence lower bound (ELBO). The ELBO, as the name might suggest, provides a lower bound on $\log p(\mathbf{y})$ that we can tractably optimise. It is a lower bound because the KL divergence is always greater than or equal to zero. When $q(\theta) = p(\theta \mid \mathbf{y})$, the ELBO is equal to the marginal log-likelihood.

We will now proceed to derive the ELBO in two ways: first using the definition of the KL divergence, and secondly by applying Jensen’s inequality to the marginal log-likelihood $\log p(\mathbf{y})$.

KL Divergence Derivation

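From its definition, we can rewrite the KL divergence in terms of expectations

$$
\begin{aligned}
\operatorname{KL}\big(q(\theta) \,\|\, p(\theta \mid \mathbf{y})\big) &= \mathbb{E}_{q}[\log q(\theta)] - \mathbb{E}_{q}[\log p(\theta \mid \mathbf{y})] \\
&= \mathbb{E}_{q}[\log q(\theta)] - \mathbb{E}_{q}\left[\log \frac{p(\mathbf{y} \mid \theta)\, p(\theta)}{p(\mathbf{y})}\right].
\end{aligned}
$$

By the linearity of the expectation, we now have the following

$$
\begin{aligned}
\operatorname{KL}\big(q(\theta) \,\|\, p(\theta \mid \mathbf{y})\big) &= \mathbb{E}_{q}[\log q(\theta)] - \mathbb{E}_{q}[\log p(\mathbf{y} \mid \theta)] - \mathbb{E}_{q}[\log p(\theta)] + \mathbb{E}_{q}[\log p(\mathbf{y})] \\
&= \mathbb{E}_{q}[\log q(\theta)] - \mathbb{E}_{q}[\log p(\mathbf{y} \mid \theta)] - \mathbb{E}_{q}[\log p(\theta)] + \log p(\mathbf{y}).
\end{aligned}
$$

The final step relies on the fact that $\log p(\mathbf{y})$ is independent of $\theta$, so its expectation is simply a constant, i.e., $\mathbb{E}_{q}[\log p(\mathbf{y})] = \log p(\mathbf{y})$.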

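We now introduce the ELBO of $q$, defined as

$$\operatorname{ELBO}(q) = \mathbb{E}_{q}[\log p(\mathbf{y} \mid \theta)] - \operatorname{KL}\big(q(\theta) \,\|\, p(\theta)\big).$$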

Intuitively, we can see the ELBO as the expectation of our data's log-likelihood under the variational distribution minus the KL-divergence from our variational distribution to our prior. When optimising this with respect to the variational parameters, the first term encourages $q$ to best explain the data, whilst the second term regularises the optimisation towards the prior.

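Combining the ELBO with the expanded form of the KL divergence, we can see that

$$\log p(\mathbf{y}) = \operatorname{ELBO}(q) + \operatorname{KL}\big(q(\theta) \,\|\, p(\theta \mid \mathbf{y})\big).$$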

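Since the KL-divergence is greater than or equal to zero, the ELBO is a lower bound on the marginal log-likelihood:

$$\log p(\mathbf{y}) \geq \operatorname{ELBO}(q),$$

with equality being met if, and only if, $q(\theta) = p(\theta \mid \mathbf{y})$.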
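The bound can be checked on a toy model where everything is available in closed form. The sketch below assumes a conjugate setup chosen purely for illustration and not taken from the text above: a prior $\theta \sim \mathcal{N}(0, 1)$, a likelihood $y_i \mid \theta \sim \mathcal{N}(\theta, \sigma^2)$ with known $\sigma$, and a Gaussian variational distribution $q(\theta) = \mathcal{N}(m, s^2)$. The function names and the data values are hypothetical.

```python
import numpy as np

def log_evidence(y, sigma=1.0):
    """Exact log p(y): marginally, y ~ N(0, sigma^2 I + 1 1^T)."""
    n = y.size
    cov = sigma**2 * np.eye(n) + np.ones((n, n))
    _, logdet = np.linalg.slogdet(cov)
    quad = y @ np.linalg.solve(cov, y)
    return -0.5 * (n * np.log(2 * np.pi) + logdet + quad)

def elbo(m, s, y, sigma=1.0):
    """Closed-form ELBO for q(theta) = N(m, s^2) under the toy model."""
    # E_q[log p(y | theta)], using E_q[(y_i - theta)^2] = (y_i - m)^2 + s^2.
    exp_loglik = np.sum(
        -0.5 * np.log(2 * np.pi * sigma**2)
        - ((y - m) ** 2 + s**2) / (2 * sigma**2)
    )
    # KL( N(m, s^2) || N(0, 1) ), the divergence from q to the prior.
    kl_to_prior = -np.log(s) + 0.5 * (s**2 + m**2) - 0.5
    return exp_loglik - kl_to_prior

y = np.array([0.8, 1.3, -0.2, 1.9, 0.6])

# Exact Gaussian posterior for this conjugate model (sigma = 1).
post_prec = 1.0 + y.size
post_mean = y.sum() / post_prec
post_sd = post_prec**-0.5

elbo_at_posterior = elbo(post_mean, post_sd, y)  # matches log_evidence(y)
elbo_at_prior = elbo(0.0, 1.0, y)                # strictly smaller
```

Setting $q$ to the exact posterior recovers the log evidence up to floating-point error, while any other choice, such as the prior, gives a strictly smaller value.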

Jensen’s Inequality Derivation

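The ELBO defined above can also be derived using Jensen’s inequality. We’ll proceed in a similar fashion to above by giving the derivation in full with underbraced hints. Note that $\log$ is concave, so Jensen's inequality applies with its direction reversed (equivalently, apply it to the convex function $-\log$).

$$
\begin{aligned}
\log p(\mathbf{y}) &= \log \int p(\mathbf{y}, \theta)\, \mathrm{d}\theta \\
&= \log \int \underbrace{\frac{q(\theta)}{q(\theta)}}_{=1} p(\mathbf{y}, \theta)\, \mathrm{d}\theta \\
&= \log \underbrace{\mathbb{E}_{q}\left[\frac{p(\mathbf{y}, \theta)}{q(\theta)}\right]}_{\text{definition of expectation}} \\
&\geq \underbrace{\mathbb{E}_{q}\left[\log \frac{p(\mathbf{y}, \theta)}{q(\theta)}\right]}_{\text{Jensen's inequality}}.
\end{aligned}
$$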

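At this point we are done in that we have applied Jensen's inequality to get a bound on the marginal log-likelihood. However, unlike the bound from the KL divergence derivation, it is not immediately obvious how this result can be practically used. To illuminate this, we'll rearrange the inequality a little more.

$$
\begin{aligned}
\mathbb{E}_{q}\left[\log \frac{p(\mathbf{y}, \theta)}{q(\theta)}\right] &= \mathbb{E}_{q}\left[\log \frac{p(\mathbf{y} \mid \theta)\, p(\theta)}{q(\theta)}\right] \\
&= \mathbb{E}_{q}[\log p(\mathbf{y} \mid \theta)] + \mathbb{E}_{q}\left[\log \frac{p(\theta)}{q(\theta)}\right] \\
&= \mathbb{E}_{q}[\log p(\mathbf{y} \mid \theta)] - \operatorname{KL}\big(q(\theta) \,\|\, p(\theta)\big).
\end{aligned}
$$

This rearrangement closes the loop: the bound obtained from Jensen's inequality is exactly the ELBO from the earlier derivation in terms of the KL divergence definition.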
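The Jensen form of the bound also suggests a simple Monte Carlo estimator: draw samples from $q$ and average $\log p(\mathbf{y}, \theta) - \log q(\theta)$. The sketch below is a minimal illustration under assumed modelling choices that are not from the text: a prior $\theta \sim \mathcal{N}(0, 1)$, a unit-variance Gaussian likelihood, and a Gaussian $q$. The names `mc_elbo` and `log_normal_pdf` and the data values are hypothetical.

```python
import numpy as np

def log_normal_pdf(x, mean, sd):
    """Log density of N(mean, sd^2) evaluated elementwise at x."""
    return -0.5 * np.log(2 * np.pi * sd**2) - (x - mean) ** 2 / (2 * sd**2)

def mc_elbo(m, s, y, rng, num_samples=5_000):
    """Monte Carlo estimate of E_q[log p(y, theta) - log q(theta)]."""
    theta = rng.normal(m, s, size=num_samples)
    # Sum the per-observation log-likelihoods for every sampled theta.
    log_lik = log_normal_pdf(y[None, :], theta[:, None], 1.0).sum(axis=1)
    log_prior = log_normal_pdf(theta, 0.0, 1.0)
    log_q = log_normal_pdf(theta, m, s)
    return float(np.mean(log_lik + log_prior - log_q))

y = np.array([0.8, 1.3, -0.2, 1.9, 0.6])
rng = np.random.default_rng(0)

# Exact posterior of this conjugate toy model: N(sum(y) / (1 + n), 1 / (1 + n)).
post_prec = 1.0 + y.size
estimate_at_posterior = mc_elbo(y.sum() / post_prec, post_prec**-0.5, y, rng)
estimate_at_prior = mc_elbo(0.0, 1.0, y, rng)
```

When $q$ is the exact posterior, the integrand is constant in $\theta$ and equal to $\log p(\mathbf{y})$, so the estimate has essentially no Monte Carlo noise. For any other $q$ the estimate is noisy and, in expectation, falls below the log evidence.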

Further Reading

For a much deeper look at VI, see the excellent review paper by Blei et al. (2017).